I spoke last week at the OK SPIN meeting on Behavior-Driven Development. Here are the slides and notes from that meeting.

If you would like to get the code I used in the demo, let me know.

https://docs.google.com/file/d/0B4xOrnUuWgtPdUJvOEt3dXdJZUU/edit?usp=sharing

The Red Earth QA SIG is an Information Technology organization that focuses on improving the quality of software implementation projects by sharing information on testing tools and techniques. This also includes networking with peers that may or may not be full-time Quality Assurance staff.

Tuesday, August 06, 2013

Wednesday, July 17, 2013

Test Automation for Bug Fix Verification

Recently, I've been looking at Behavior-Driven Development. The gist of this approach is that you write stories that describe your system's behavior (or desired behavior) and then feed those stories into your test system.

Here is a sample story.

This highly-structured (plain English) format can be parsed and used to direct your automation code. Originally, this was meant as a way to describe new functionality.
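To give a feel for how such a format can be parsed, here is a minimal sketch in Python. It assumes the common Given/When/Then layout; the story text, the `parse_story` function, and the keyword list are all hypothetical illustrations, not the sample from this post or the API of any particular BDD tool.

```python
import re

# Hypothetical story text, written only for this illustration.
STORY = """\
Scenario: Reopen a closed ticket
  Given a ticket in the Closed state
  When the user reopens the ticket
  Then the ticket is in the Open state
"""

# A step line starts with one of these keywords followed by a free-text phrase.
STEP_PATTERN = re.compile(r"^\s*(Given|When|Then|And)\s+(.*)$")


def parse_story(text):
    """Return (title, steps), where steps is a list of (keyword, phrase) tuples."""
    title = None
    steps = []
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("Scenario:"):
            title = stripped[len("Scenario:"):].strip()
            continue
        match = STEP_PATTERN.match(line)
        if match:
            steps.append((match.group(1), match.group(2)))
    return title, steps


title, steps = parse_story(STORY)
for keyword, phrase in steps:
    print(keyword, "->", phrase)
```

Real BDD frameworks go one step further and map each phrase to a piece of automation code, but the parsing idea is the same.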

But consider this. Suppose you wrote stories like this for the expected behavior as a part of each defect report. Additionally, suppose you had a system that would:

- go through each fixed defect for a new build

- extract any attached story

- run that story through your test automation system

- if passed, close the ticket

- if failed, attach a screenshot/log file/etc. of the failure
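The steps above could be sketched roughly like this. Everything here is an assumption made for illustration: the `Defect` class stands in for whatever your defect tracker provides, and `fake_runner` stands in for a real test automation system.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Defect:
    """Hypothetical stand-in for a ticket in a defect tracker."""
    ticket_id: str
    status: str                      # e.g. "fixed" or "closed"
    story: Optional[str] = None      # attached story text, if any
    attachments: List[str] = field(default_factory=list)


# A runner takes story text and returns (passed, evidence), where evidence
# is a log excerpt or screenshot path describing a failure.
StoryRunner = Callable[[str], Tuple[bool, str]]


def verify_fixed_defects(defects: List[Defect], run_story: StoryRunner) -> None:
    """Run the attached story for each fixed defect and update the ticket."""
    for defect in defects:
        if defect.status != "fixed" or defect.story is None:
            continue  # nothing to verify automatically
        passed, evidence = run_story(defect.story)
        if passed:
            defect.status = "closed"
        else:
            defect.attachments.append(evidence)


def fake_runner(story: str) -> Tuple[bool, str]:
    """Toy runner: a story 'fails' only if it mentions the word 'crash'."""
    if "crash" in story:
        return False, "log: step failed"
    return True, ""


defects = [
    Defect("BUG-1", "fixed", "Given a form\nWhen I save\nThen it is stored"),
    Defect("BUG-2", "fixed", "Given a form\nWhen I save\nThen no crash occurs"),
    Defect("BUG-3", "fixed"),  # no story attached, so it is left for a human
]
verify_fixed_defects(defects, fake_runner)
```

Passing the runner in as a parameter keeps the loop independent of any specific tracker or automation tool.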

How much time would that save? How much quicker could new builds be verified?

I'll be giving this more thought, and I hope to turn it into a future blog post.

Monday, July 15, 2013

OK SPIN - Summer Meeting

Wednesday, July 31st, 6pm

Come to the Summer OK SPIN meeting. At this meeting, we will look at an approach to producing software that has lots of interesting features:

- Can be applied to agile as well as waterfall approaches (even when there is no process!)

- Supplements (or establishes) clear documentation of system functionality

- While there is programming support for this, you can still get a benefit even without using the technology side.

- This documentation becomes direct input to system testing, requiring no human interpretation once the testing framework is configured.

- Changes to documentation are immediately available for system testing

- There are programming tools (including IDE plugins) to streamline the process of using this documentation for system testing.

- The testing output uses the original documentation to indicate which tests pass and which tests fail.

(Can you guess what type of tool this is?)

From 6pm to 7pm, come and mingle with other software professionals.

From 7pm to 8pm, we will have the presentation.

Hope to see you there!

https://plus.google.com/events/cdhb06s2kncfm2ch2chc32q93b8?partnerid=gplp0&authkey=COfpg_7Xlq-JGw

Thursday, May 30, 2013

Triggers

Michael Lopp is one of my favorite authors when I think about being a software professional.

This article is a great view into working with others.

http://www.randsinrepose.com/archives/2013/05/28/triggers.html

Saturday, May 18, 2013

Fill out a survey about Software Testers

Hey Software Testers!

Help out Randy Rice by filling out a short survey.

http://randallrice.blogspot.com/2013/05/help-with-survey-on-software-test.html

Tuesday, April 30, 2013

How to de-motivate your team

http://randallrice.blogspot.com/2011/12/video-on-how-to-de-motivate-your-team.html

Randy is a great guy. Here is a great sample of his insight.

Tuesday, April 23, 2013

Videos of Computer Technology

I've spoken about the Security Now! podcast before. The AskMisterWizard.com site has posted videos that illustrate the concepts from the podcast.

http://www.askmisterwizard.com/EZINE/SecurityNow/SecurityNowIllustrated.htm

Enjoy!

Thursday, March 28, 2013

4 Things to Remember (about metrics, from Cem Kaner)

Cem Kaner is one of the few researchers and educators out there who focus on Software Quality Assurance full-time.

I ran across this article (linked below) where he talks about metrics. Here is the final slide, but you'll want to review the whole thing to get the details.

4 Things to Remember

1. Yes, most software metrics are (to some degree) invalid. However, that doesn’t reduce the need for the information we are trying to get from them.

2. I think it’s part of the story of humanity that we’ve always worked with imperfect tools and always will. We succeed by learning the strengths, weaknesses and risks of our tools, improving them when we can, and mitigating their risks.

3. We need to look for the truths behind our numbers. This involves discovery and cross-validation of patterns across data, across analyses, and over time. The process is qualitative.

4. Qualitative analysis is more detailed and requires a greater diversity of skills than quantitative. Qualitative analysis is not a free (or even a cheap) lunch. We evaluate the quality of quantitative measures by critically considering their validity. An equally demanding evaluation for qualitative measures considers their credibility.

http://kaner.com/pdfs/PracticalApproachToSoftwareMetrics.pdf

Tuesday, January 29, 2013

Winter 2013 Meeting of OK SPIN

https://plus.google.com/events/cjgstjbf50eive03l3fjt8gbsjs?authkey=CJ7x_7zjgbvOjAE

We've finally settled on a name: OK SPIN, the Oklahoma Software Professionals Interaction Network! Come join other professionals and discuss the challenges and solutions for producing software.

We hope you can join us for our Winter 2013 meeting.

TOPIC: Tools

If you would like to prepare a 5-10 minute demo of a favorite tool, bring it with you and share with the group.

LOCATION: We are on the OU campus at 3 Partners Place on the main floor. (see the map for details)

TIME: Monday, Feb. 11, 6pm-8pm.

AGENDA: 6pm-7pm Networking, 7pm-8pm Presentations.

Refreshments will be served. Please feel free to bring a meal to eat.

SPONSORS: If you would like to sponsor these meetings, please contact Robert at robert@watkins.net

QUESTIONS: If you have questions, contact Robert at robert@watkins.net

Follow the OK SPIN Community on Google+ and share your own ideas!

Google+ Community page for OK SPIN

https://plus.google.com/communities/108846666538991188637

Thursday, January 24, 2013

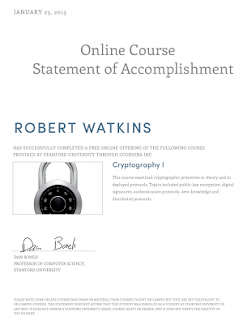

I finished Cryptography I!

I started this course last year and it ended this week. It was a fun course and I enjoyed it very much. Cryptography II starts in a couple months. Yay!

Wednesday, January 23, 2013

Hold off taking "Software Testing" from Udacity

I was hoping to be able to recommend this course to others, but at this time I cannot. A lot of basic information is missing, and the exercises are vague and not based on the material being presented.

Here are some specific examples.

Equivalent tests. The lecture essentially said, 'equivalent tests are tests that test the same thing.' Something more along the lines of http://en.wikipedia.org/wiki/Equivalence_partitioning would have been appropriate.
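For readers new to the idea, here is a small sketch of equivalence partitioning in Python. The `shipping_band` function and its weight limits are hypothetical, invented only to have something to test. The point is that each range of inputs the code should treat the same way gets one representative test value.

```python
def shipping_band(weight_kg):
    """Hypothetical function under test: classify a parcel by weight."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg <= 2:
        return "small"
    if weight_kg <= 20:
        return "medium"
    return "large"


# One (partition name, representative input, expected result) per partition.
# Any other value in the same range should behave the same way.
PARTITIONS = [
    ("small", 1, "small"),
    ("medium", 10, "medium"),
    ("large", 50, "large"),
]


def run_partition_tests():
    """Check one representative per valid partition, plus the invalid one."""
    results = {}
    for name, representative, expected in PARTITIONS:
        results[name] = shipping_band(representative) == expected
    # The invalid partition (zero or negative weight) should be rejected.
    try:
        shipping_band(-5)
        results["invalid"] = False
    except ValueError:
        results["invalid"] = True
    return results


results = run_partition_tests()
print(results)
```

In practice you would usually pair this with boundary values (here 0, 2, and 20), since defects tend to cluster at the edges of partitions.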

Specifications. The lecture basically said, 'you won't often get specs, so you will have to build them'. That's a correct statement, but there is quite a bit of work involved in doing this successfully. Talking to people (other than the developer) is conspicuously absent from the discussion. There is often a non-developer who has an expectation of how something should work. A discussion of 'test oracles' would have been appropriate (see http://www.softwarequalitymethods.com/Papers/OracleTax.pdf for an excellent read).

GUI testing. The lecture basically said, 'try to randomly fire GUI events'. Where is the discussion of modeling use cases as the start of test cases? Where is an attempt to understand the input/output of individual GUI elements?

It's not so much that the content is wrong, but that there is no acknowledgement of the wide variety of approaches available for testing. I get the feeling that the authors of this course have spent the majority of their careers in code and not in a manual testing role.

Anyone else have experience with this class?

Thursday, January 17, 2013

Answering Loaded Questions

As a human, I am slow to evolve my behavior. For example, I was recently asked, "What is slowing the pace of development for the new regressions so much?"

In the past, I would have had a snarky response, or just gotten upset. I still got upset, but I didn't speak up at first. I gave it some thought.

Here's what I came up with. I found an article, "Indirect Responses to Loaded Questions", from 1978: http://www.aclweb.org/anthology-new/T/T78/T78-1029.pdf . It describes how a natural language processor could handle such situations.

Here's the gist:

- Identify the assumptions in the question

- Determine which are valid, invalid or unknown

- Respond with at least one of the following types (called 'Corrective Indirect Responses'):

  - Answer a more general question

  - Offer the answer to a related question

  - Correct the assumption presumed to be true and indicate that it is either false or unknown.

So, in my case, the assumptions and their validity are:

- I would know about the pace of developing new regression tests. (this is true)

- The pace of developing the regression tests is slowing. (this is only true in a very narrow view of the situation)

- There is an expected pace for the work. (this is not true)
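Since the article is about how a program could handle this, the classification above can be sketched as data. This is only an illustration: the `Assumption` class and `assumptions_to_correct` function are hypothetical names, and marking the second assumption as UNKNOWN is one possible reading of "only true in a very narrow view".

```python
from dataclasses import dataclass
from enum import Enum


class Validity(Enum):
    VALID = "valid"
    INVALID = "invalid"
    UNKNOWN = "unknown"


@dataclass
class Assumption:
    text: str
    validity: Validity


def assumptions_to_correct(assumptions):
    """Return the assumptions a corrective indirect response should address."""
    return [a for a in assumptions if a.validity is not Validity.VALID]


# The three assumptions from the loaded question, with an assumed classification.
assumptions = [
    Assumption("I would know about the pace of developing new regression tests.",
               Validity.VALID),
    Assumption("The pace of developing the regression tests is slowing.",
               Validity.UNKNOWN),
    Assumption("There is an expected pace for the work.",
               Validity.INVALID),
]

for assumption in assumptions_to_correct(assumptions):
    print(f"Address in the response ({assumption.validity.value}): {assumption.text}")
```

Writing the assumptions down this way makes it obvious which parts of the question need a response before the question itself can be answered.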

I'm still thinking about my response. Here is a sample of what I've come up with.

- "As viewed through the project dashboard, the pace of the project has been pretty consistent, even if a bit slower than desired."

- "Before I was asked to coordinate this work, the project had no measurable progress for over a year. It now has measurable progress."

- "When we discussed applying normal project planning methods to this work, I was told that it was too much effort. If we had done that, we could answer this question clearly. We have not asked for any commitments of time for all the team members involved. We have not asked for estimates of the effort to complete the work. Both of these would help manage the progress of this work."

What would you do?

UPDATE

I thought about this some more. Here are some additional responses, focused on providing solutions, rather than defending or explaining the current state.

- "We can certainly discuss the pace of work. That will require agreeing to specific commitments with those doing the work."

- "Let's discuss in detail specific estimates for the remaining work and available time with those doing the work so that we can get a better understanding of what the end date could be."

Tuesday, January 08, 2013

Udacity

Have you or anyone you know taken the software testing course from Udacity?

http://www.udacity.com/overview/Course/cs258/CourseRev/1

I think I'll give it a spin once my cryptography course is done.

Monday, January 07, 2013

Janitor Monkey

When I first heard about "Chaos Monkey" from Netflix, I was hooked. Its only job is to randomly terminate running instances to ensure that error handling and recovery work well.

When I heard from Slashdot that "Janitor Monkey" was open-sourced, I again jumped for joy.

From the article:

"Janitor Monkey is part of the so-called Simian Army of at least eight internal management tools, including Latency Monkey, which introduces artificial delays into the system, and Chaos Monkey. Some (but not all) of these tools have been open-sourced."

slashdot (http://s.tt/1y3BK)

How many systems that you work on are able to stand up to this kind of self-administered abuse? :)
